[Statistics Forum] Contrastive Divergence Algorithm Converges

Title: Contrastive Divergence Algorithm Converges

Time: 13:30 – 14:30, 2015-12-28 (Monday)

Location: Room 209, Weiqing Building (Center for Statistical Science, Tsinghua University)

Speaker: Bai Jiang, Stanford University

Abstract:

Maximum likelihood learning of Markov random fields is challenging because it requires estimating complicated integrals or sums over an exponential number of terms. Markov Chain Monte Carlo (MCMC) methods can give asymptotically unbiased estimates, but only after the chain has run for a large number of steps. Geoffrey Hinton (2002) proposed the Contrastive Divergence (CD) learning algorithm, which runs only a few Markov chain transitions yet works surprisingly well. Although CD learning has been widely used for Deep Learning tasks, there are few theoretical results on the convergence properties of the algorithm. Our work proves the convergence rate of the CD algorithm in exponential families through a connection between the Lyapunov drift condition and Harris recurrent chains.

Keywords: Deep Learning, Contrastive Divergence, Convergence Rate, Lyapunov Condition, Harris Recurrent Chain
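
For readers new to the idea, the CD update mentioned in the abstract can be illustrated on a toy exponential family. The sketch below is not taken from the talk: it assumes the model N(theta, 1) with sufficient statistic x, and it assumes an autoregressive transition kernel that leaves N(theta, 1) invariant. The function name `cd_fit_gaussian_mean` and the parameters `k`, `lr`, `n_iters`, and `rho` are hypothetical choices made for illustration. Each iteration starts the chains at the data, runs only `k` transitions, and moves theta along the difference between the data statistic and the statistic of the short-run samples.

```python
import random


def cd_fit_gaussian_mean(data, k=1, lr=0.1, n_iters=500, rho=0.5, seed=0):
    """Toy CD-k for the exponential family N(theta, 1) (illustrative assumption)."""
    rng = random.Random(seed)
    theta = 0.0
    data_mean = sum(data) / len(data)
    # Noise scale that makes the AR(1) kernel below leave N(theta, 1) invariant.
    noise_scale = (1.0 - rho ** 2) ** 0.5
    for _ in range(n_iters):
        # CD starts every chain at an observed data point, not at a random state.
        samples = list(data)
        for _ in range(k):
            # One Markov transition per sample under the current theta.
            samples = [
                theta + rho * (x - theta) + noise_scale * rng.gauss(0.0, 1.0)
                for x in samples
            ]
        model_mean = sum(samples) / len(samples)
        # CD update: data statistic minus statistic after k transitions.
        theta += lr * (data_mean - model_mean)
    return theta


if __name__ == "__main__":
    gen = random.Random(1)
    observations = [gen.gauss(2.0, 1.0) for _ in range(500)]
    estimate = cd_fit_gaussian_mean(observations, k=1)
    print("sample mean:", sum(observations) / len(observations))
    print("CD-1 estimate:", estimate)
```

In this toy setting the expected CD update is proportional to the gap between the sample mean and theta, so the iterates settle near the maximum likelihood estimate even with `k=1`. With a constant step size they fluctuate around it rather than converging exactly.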

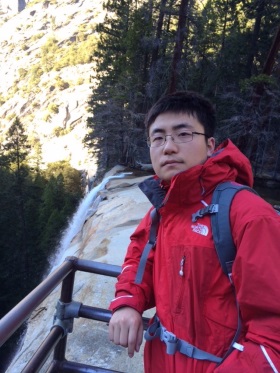

About the speaker

Bai Jiang received his Bachelor’s and Master’s degrees from the Department of Automation, Tsinghua University, and is now a senior PhD student working with Prof. Wing Wong in the Department of Statistics, Stanford University. His research interests lie in Bayesian computation, machine learning, and random optimization algorithms.

Homepage: www.stanford.edu/~baijiang